Walk in the Past

Experience London Bridge as it was in the past, a bustling hub of activity, with towering gates and busy workshops. The application aims to transport users to this time period, providing them with a sense of the sights, sounds, and daily life on the bridge. Through this experience, users will have the opportunity to gain a deeper understanding of the historical significance of the bridge and its evolution over time.

Today the area is a shadow of its former self. The bridge was rebuilt in the 20th century to meet the needs of the modern city, and its once-iconic status has been overshadowed by Tower Bridge, with which it is often confused.

The app aims to let people stand on the site of London Bridge and walk through it as it was 400 years ago, using augmented reality and historical data to create an immersive and educational representation of its rich history.

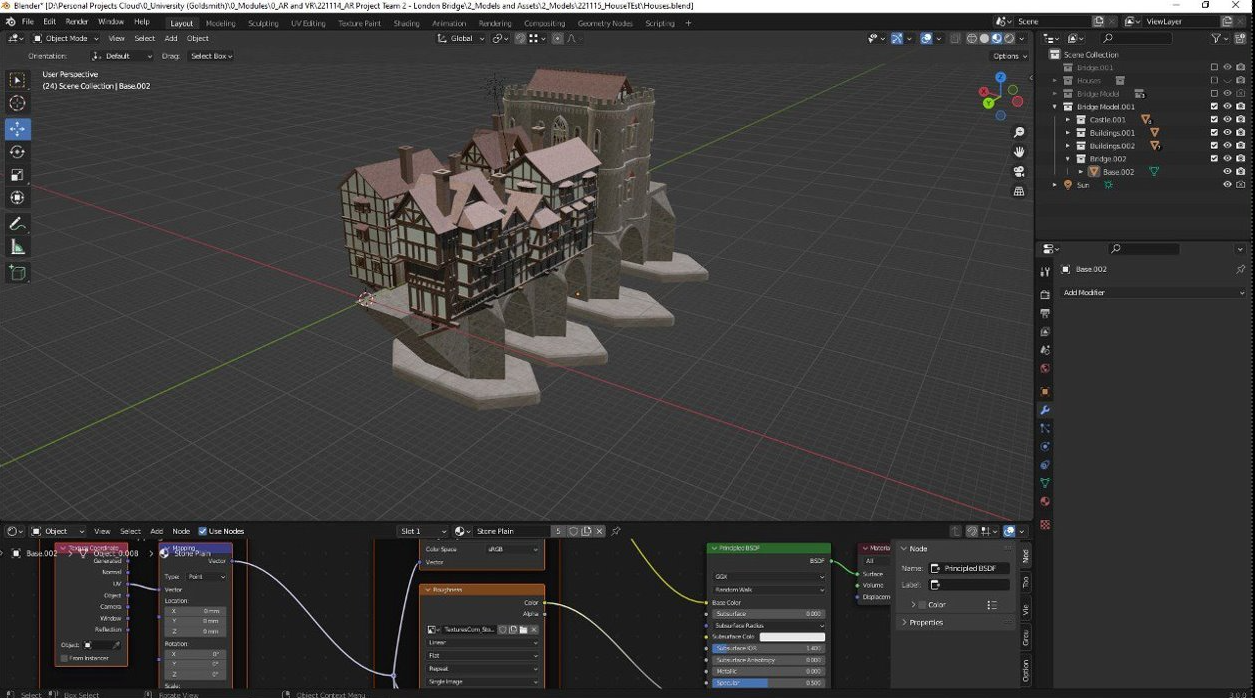

In order to present the past in the real world, it first had to be recreated digitally. The model is based on paintings and historical records of the bridge, as well as publicly available 3D reconstructions. It was made in Blender, with a focus on keeping it as light as possible while still conveying as much information as possible. Because phones are still quite limited in their ability to render large scenes, we made a design decision to recreate the bridge only up to the first gate; anything beyond that is not modeled in 3D.

Brief

Looking at the tourist and architectural aspects of London, we landed on the idea of recreating a piece of the past that people can interact with at a large scale in and around the center of London. When we looked at other historical apps, we noticed that most of them are not site-specific and can be used anywhere. We thought that anchoring the project to a real-world location would make it unique and add a sense of space and history that those apps could not offer. Our aim was therefore to explore the site and understand how we could create such a unique educational experience.

Site

The site is extremely busy, as it's one of the few pedestrianized crossings between the City and the South Bank, with tens of thousands of people using it for commuting or tourism. To understand how people would use the AR application, we spent time analyzing the area and walking around it to identify a spot from which the user could begin the experience.

The London Bridge street sign served as the image target. It worked well because it was high-contrast and distinctive enough to be used with AR.

View of the start of London Bridge, where our experience begins.

There were other signs that could have been used as image targets; however, they were limited in number or location.

We identified the corners of the bridge as the starting points for the experience, as they allow people to keep walking along the bridge and also have street signs that we could use as image targets to activate the experience.

The site was also 3D scanned in order to get the space and dimensions right.

User Journey

When the user opens the app, they are greeted with a short brief and an invitation to continue.

The next page shows the location and gives clear instructions on how to find and operate the AR experience.

Once the camera has opened, all the user has to do is find the sign and point the camera at it, and the scene will load.

The user is then free to walk around the scene: once it has been instantiated, it will not be lost, thanks to the phone's internal gyroscopes.

Signage and View Tracking

Animated Creatures

3D Spatial Sounds

Application Features and Walkthrough

The backbone of the app is the image and plane tracking provided by the AR Foundation libraries in Unity. AR Foundation uses the images we captured to locate and scale the scene, which is essential for getting the immersion right. Spatial tracking then takes over, allowing the user to roam the space freely while their position is tracked accurately relative to the digital scene.

Working in Unity also allowed us to implement a variety of immersive elements, such as animations and 3D spatial sounds. Because the app uses a correctly scaled scene, it can work out how much sound each creature or object produces and how much of it the user can hear. Animated creatures and people add depth and activity to the scene, so that it is more than a static space.
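As a rough illustration of the image-target step, the sketch below shows one way a scene could be placed on a detected image with AR Foundation. It is not the project's actual code: the class name `SignSceneSpawner` and the `bridgeScenePrefab` field are hypothetical, and it assumes AR Foundation 4.x or 5.x, where `ARTrackedImageManager` exposes the `trackedImagesChanged` event (later versions replace this event).

```csharp
using UnityEngine;
using UnityEngine.XR.ARFoundation;

// Hypothetical sketch: spawns the bridge scene once, at the pose of the
// first detected image target. Assumes AR Foundation 4.x / 5.x.
public class SignSceneSpawner : MonoBehaviour
{
    // Manager whose reference image library contains the street sign photo.
    [SerializeField] ARTrackedImageManager trackedImageManager;

    // Prefab holding the bridge model (hypothetical name).
    [SerializeField] GameObject bridgeScenePrefab;

    GameObject spawnedScene;

    void OnEnable()
    {
        trackedImageManager.trackedImagesChanged += OnTrackedImagesChanged;
    }

    void OnDisable()
    {
        trackedImageManager.trackedImagesChanged -= OnTrackedImagesChanged;
    }

    void OnTrackedImagesChanged(ARTrackedImagesChangedEventArgs args)
    {
        // Only the first detection matters; later detections are ignored.
        foreach (ARTrackedImage image in args.added)
        {
            if (spawnedScene != null)
            {
                return;
            }

            // Place the scene at the sign's position and orientation.
            spawnedScene = Instantiate(
                bridgeScenePrefab,
                image.transform.position,
                image.transform.rotation);
        }
    }
}
```

Instantiating the scene once in world space, rather than parenting it to the tracked image, is one way to keep it in place after the sign leaves the camera's view.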

My role in the project focused on AR development in Unity and 3D modeling, working on coding the application and integrating the scale model of the London Bridge over real-world space.

Assankhan Amirov

Code and Contribution

One of the crucial aspects of the app we wanted to implement was taking screenshots within the app, so people could find interesting views and things they discovered and take a picture of them straight away.

    using System.Collections;
    using UnityEngine;

    // Captures the current frame and saves it to the device gallery.
    // The save call comes from the NativeGallery plugin.
    public class Screenshot : MonoBehaviour
    {
        // UI root that is hidden while the frame is captured.
        public GameObject UI;

        private IEnumerator TakeScreenshotAndSave()
        {
            // Wait until the frame has finished rendering.
            yield return new WaitForEndOfFrame();

            Texture2D ss = new Texture2D(Screen.width, Screen.height, TextureFormat.RGB24, false);
            ss.ReadPixels(new Rect(0, 0, Screen.width, Screen.height), 0, 0);
            ss.Apply();

            // Save the screenshot to Gallery/Photos
            NativeGallery.Permission permission = NativeGallery.SaveImageToGallery(ss, "GalleryTest", "Image.png", (success, path) => Debug.Log("Media save result: " + success + " " + path));
            Debug.Log("Permission result: " + permission);

            // To avoid memory leaks
            Destroy(ss);

            // Bring the UI back once the capture is done.
            UI.SetActive(true);
        }

        // Called by the on-screen screenshot button.
        public void ScreenshotButton()
        {
            // Hide the UI so it does not appear in the picture.
            UI.SetActive(false);

            // Take a screenshot and save it to Gallery/Photos
            StartCoroutine(TakeScreenshotAndSave());
        }
    }

Another interaction that I implemented was having the barrels display information when you look at them. We discussed how best to present information to users when they wanted to know more about something. Rather than using big billboards with lots of text, I created a script that follows the user and reveals relevant information when an object is looked at, in order to drive immersion and interactivity.

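    using System.Collections.Generic;
    using System.Linq;
    using UnityEngine;

    // Shows the info panel of whichever tagged object the user is looking at.
    // Assumed to be attached to the AR camera, so transform.forward is the view direction.
    public class Gaze : MonoBehaviour
    {
        List<InfoBehaviour> infos = new List<InfoBehaviour>();

        void Start()
        {
            // Cache every info panel in the scene once.
            infos = FindObjectsOfType<InfoBehaviour>().ToList();
        }

        void Update()
        {
            // Cast a ray along the view direction.
            if (Physics.Raycast(transform.position, transform.forward, out RaycastHit hit)
                && hit.collider.CompareTag("hasInfo"))
            {
                OpenInfo(hit.collider.GetComponent<InfoBehaviour>());
            }
            else
            {
                // Nothing with information is in view, so hide every panel.
                CloseAll();
            }
        }

        // Opens the requested panel and closes all others.
        void OpenInfo(InfoBehaviour desiredInfo)
        {
            foreach (InfoBehaviour info in infos)
            {
                if (info == desiredInfo) info.OpenInfo();
                else info.CloseInfo();
            }
        }

        void CloseAll()
        {
            foreach (InfoBehaviour info in infos) info.CloseInfo();
        }
    }

Most of the time was spent on 3D modeling, the breakdown of which is shown at the top of the page, and on the implementation of the model in Unity.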

A horse stands at the end of the model to discourage people from going too far back. It is also animated and has sounds that fade away as you walk further away from it.

Probably the most striking imagery is the contrast between the large-scale, newly built buildings and the old ones; their different architecture and size make you realize how much the area has changed.

Another aspect that I would have loved to work on more, given more time, is making each shop and house unique and filled with things related to the history of the site for the user to explore.
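The distance-based fade can be approximated with Unity's built-in `AudioSource` settings. The sketch below is an illustration rather than the project's actual script: the class name `FadingCreatureSound` and both distance values are assumptions, and it expects an audio clip to be assigned to the `AudioSource` in the Inspector.

```csharp
using UnityEngine;

// Hypothetical sketch: makes a creature's sound fully 3D and fades it out
// linearly with distance from the listener (the AR camera).
[RequireComponent(typeof(AudioSource))]
public class FadingCreatureSound : MonoBehaviour
{
    // Within this distance (in scene units) the sound plays at full volume.
    [SerializeField] float fullVolumeDistance = 2f;

    // Beyond this distance the sound is no longer audible.
    [SerializeField] float silentDistance = 25f;

    void Awake()
    {
        AudioSource source = GetComponent<AudioSource>();

        // 1 = fully spatialized, so volume and panning depend on position.
        source.spatialBlend = 1f;

        // Linear rolloff fades from full volume at minDistance
        // to silence at maxDistance.
        source.rolloffMode = AudioRolloffMode.Linear;
        source.minDistance = fullVolumeDistance;
        source.maxDistance = silentDistance;

        // Keep the ambient sound running for the whole experience.
        source.loop = true;
        source.Play();
    }
}
```

If the scene is built at real-world scale, one scene unit corresponds to one meter, so the two distances can be chosen by walking the site.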

Screenshots

From our initial and quite ambitious brief, I think we have captured the essence of what we wanted to achieve: the feeling of the old environment and the immersive experience of discovering something that once was. To achieve that, the 3D bridge scene had to become smaller and more compact during production, but that allowed us to focus on making the initial part more thought-out. The addition of smaller details, such as sound design, camera interactions, and animations, enhanced the experience, which was a valuable lesson for me as an AR developer. It's never just about the visual aspects of the project, but rather the sum of the ideas and implementations that users experience in their hands.

Given more time, I would have added more interactive elements for users, such as a voiced avatar guide that could walk through the space with you, anchoring people's experience and directing them if they had never used AR before. On a similar note, most of the feedback from passers-by whom we asked to try the app was that they wanted more of the bridge: to go further, into the middle, and see the rest of London.

The most challenging part was definitely making sure that all of the elements worked together without breaking the immersion of the app. If one task worked after user input, another would break and skew the final result. It's the attention to detail that allows people to enjoy the AR tour; any imperfection or slight misalignment of the models will be noticed and will break the immersion.